Custom Chatbot To Query PDF Documents Using OpenAI and LangChain

Custom chatbots are revolutionizing the way businesses interact with their customers. With the advancements in natural language processing and artificial intelligence, chatbots can now be tailored to specific business needs, making them more efficient and effective in handling customer queries.

Building a Custom Chatbot

When it comes to creating a custom chatbot to query PDF documents, there are several steps involved. First, you need to define the scope and purpose of the chatbot. What kind of queries will it handle? What information will it retrieve from the PDF documents?

Next, choose the tools to build the chatbot. This post uses LangChain classes such as PyPDFLoader, OpenAIEmbeddings, and ChatOpenAI, which load PDF documents, generate text embeddings, and generate responses to user queries, respectively.

Step-by-Step Guide

Here is a step-by-step guide on how to build a custom chatbot to query PDF documents:

- Install the required Python packages.

- Set up the necessary environment variables, such as the OpenAI API key.

- Load the PDF documents using the PyPDFLoader.

- Split the text into smaller chunks using a text splitter.

- Generate text embeddings using OpenAIEmbeddings.

- Create a prompt template for the chatbot to generate responses.

- Build a retrieval chain to fetch relevant information from the documents.

- Pass a user question to the chain and print the generated response.

Install Required Python Packages

The following libraries are required to run the code:

- langchain_community

- langchain_openai

- langchain

- faiss-cpu (only the CPU version is needed for this code)

- langchain_text_splitters

You can install all the required libraries at once using the following command in your terminal:

$ pip install langchain_community langchain_openai langchain faiss-cpu langchain_text_splitters

Setting Environment Variables

The first step in setting up the environment is to configure the necessary environment variables. In the provided Python program, an important environment variable that needs to be set is the OpenAI API key. This key is essential for authentication and access to the OpenAI platform, which is used for generating responses to user queries.
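Hardcoding the key in the script is convenient for a quick test but easy to leak. As a minimal sketch, assuming you would rather export `OPENAI_API_KEY` in your shell or type it at a prompt, a helper like the following could set it up. `ensure_openai_key` is a hypothetical name introduced here for illustration; it is not part of LangChain or the OpenAI SDK.

```python
import getpass
import os


def ensure_openai_key(env=os.environ, ask=getpass.getpass):
    """Return the OpenAI API key, asking for it only if it is not already set.

    Hypothetical helper for illustration; `env` and `ask` are parameters
    so the function can be exercised without a real terminal.
    """
    key = env.get("OPENAI_API_KEY", "").strip()
    if not key:
        # Prompt without echoing the key to the terminal.
        key = ask("OpenAI API key: ").strip()
        if not key:
            raise ValueError("An OpenAI API key is required")
        # Store it so libraries that read the variable can find it.
        env["OPENAI_API_KEY"] = key
    return key
```

Calling `ensure_openai_key()` before creating the OpenAI objects would replace the hardcoded assignment. The script in this post sets the key inline, as shown next.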

import os

from langchain_community.document_loaders import PyPDFLoader

from langchain_openai import OpenAIEmbeddings

from langchain_openai import ChatOpenAI

from langchain.chains import create_retrieval_chain

from langchain_core.documents import Document

from langchain_community.vectorstores import FAISS

from langchain_core.prompts import ChatPromptTemplate

from langchain_text_splitters import RecursiveCharacterTextSplitter

from langchain.chains.combine_documents import create_stuff_documents_chain

os.environ["OPENAI_API_KEY"] = "YOUR_OPENAI_API_KEY" # Replace with your API key

Loading PDF Documents

Once the environment variable is set, the next step is to load the PDF documents that the chatbot will query. The program uses the PyPDFLoader class from langchain_community for this. The first snippet below loads a PDF named "50-questions.pdf" and stores the result in a variable called docs, with one document per page. The second snippet does the same for every file in a folder.

For a single PDF file

pdf_loader = PyPDFLoader('50-questions.pdf')

docs = pdf_loader.load()

For multiple PDF files

docs = []

for file in os.listdir("pdf_folder"):
    pdf_path = "./pdf_folder/" + file
    loader = PyPDFLoader(pdf_path)
    docs.extend(loader.load())
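The folder loop above hands every directory entry to PyPDFLoader, including any non-PDF files or subfolders. A minimal sketch of a filter, assuming the PDFs sit directly inside the folder, is shown here. `list_pdf_paths` is a hypothetical helper name, not a LangChain function.

```python
from pathlib import Path


def list_pdf_paths(folder):
    """Return the PDF files directly inside `folder`, sorted by path."""
    return sorted(
        str(path)
        for path in Path(folder).iterdir()
        # Skip subfolders and anything that does not end in .pdf (any case).
        if path.is_file() and path.suffix.lower() == ".pdf"
    )
```

Each returned path could then be passed to `PyPDFLoader(path).load()` in place of the raw `os.listdir` entries.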

Text Splitting and Embeddings

After loading the PDF documents, the text needs to be split into smaller chunks and indexed. RecursiveCharacterTextSplitter breaks large documents into chunks small enough to embed and to fit into a prompt; it prefers to cut at paragraph and line breaks before falling back to smaller units. OpenAIEmbeddings converts each chunk into an embedding vector, and FAISS stores those vectors in an index that supports fast similarity search, so the chunks most relevant to a question can be retrieved quickly.
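To make the idea of chunking concrete, here is a deliberately simplified sketch that cuts text into fixed-size character windows with some overlap. It is not the algorithm RecursiveCharacterTextSplitter uses (that class also looks for separators such as paragraph breaks); `split_with_overlap` and its default values are assumptions chosen for illustration.

```python
def split_with_overlap(text, chunk_size=1000, chunk_overlap=200):
    """Cut `text` into chunks of at most `chunk_size` characters.

    Consecutive chunks share `chunk_overlap` characters, so a sentence
    that straddles a boundary still appears whole in one of them.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be non-negative and smaller than chunk_size")

    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reached the end of the text
    return chunks
```

The real splitter accepts `chunk_size` and `chunk_overlap` arguments as well, so the same trade-off applies there: smaller chunks give more precise retrieval but less context per chunk. The code used in this post keeps the defaults, as shown next.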

text_splitter = RecursiveCharacterTextSplitter()

documents = text_splitter.split_documents(docs)

embeddings = OpenAIEmbeddings()

vector = FAISS.from_documents(documents, embeddings)

Creating a Prompt Template

This part defines a template for prompting the large language model (LLM) with context and a question. The ChatPromptTemplate.from_template function is used to create a template with placeholders for context ({context}) and the user's input ({input}).
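To see what the two placeholders do, the following sketch fills the same template text with plain Python string formatting. It only illustrates the substitution; `TEMPLATE` and `fill_prompt` are hypothetical names, and joining chunks with a blank line is an assumption about how the context is assembled.

```python
TEMPLATE = """Answer the following question based only on the provided context:

<context>
{context}
</context>

Question: {input}"""


def fill_prompt(chunks, question):
    """Join the retrieved chunks and substitute them, with the question, into TEMPLATE."""
    context = "\n\n".join(chunks)
    return TEMPLATE.format(context=context, input=question)


# Two made-up chunks stand in for text retrieved from the PDF.
print(fill_prompt(["Chunk one.", "Chunk two."], "What is in the document?"))
```

The LangChain template that the chatbot actually uses follows.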

prompt = ChatPromptTemplate.from_template("""

Answer the following question based only on the provided context:

<context>

{context}

</context>

Question: {input}""")

Building the Retrieval Chain

This part creates two chains:

- Document Chain: This chain uses the ChatOpenAI class (LLM) and the defined prompt to answer the user's question based on the context provided by the retrieved documents.

- Retrieval Chain: This chain combines a retriever built on the vector store with the document chain. It retrieves the chunks most relevant to the user's input question and passes them to the document chain as context.

llm = ChatOpenAI() # Chat model that generates the answer

retriever = vector.as_retriever()

document_chain = create_stuff_documents_chain(llm, prompt)

retrieval_chain = create_retrieval_chain(retriever, document_chain)

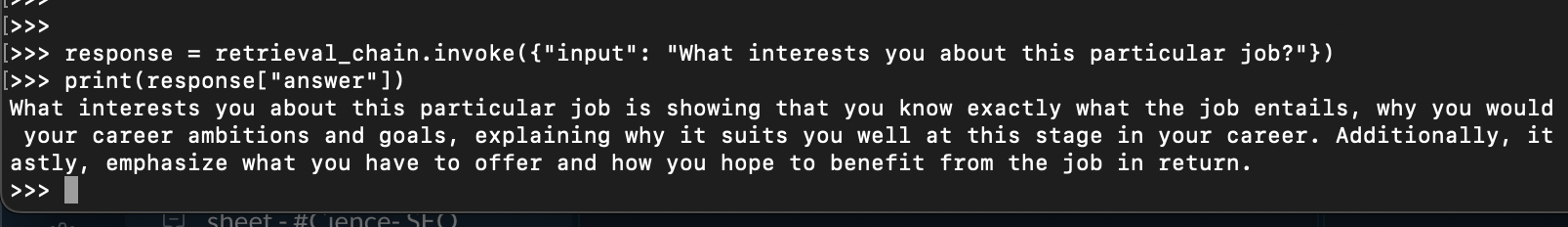
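Conceptually, the retriever turns the question into a vector and returns the stored chunks whose vectors lie closest to it. The sketch below imitates that with a toy word-count "embedding" and Euclidean distance; it is not how OpenAIEmbeddings or FAISS work internally. `toy_embed`, `top_k`, the vocabulary, and the default of `k=4` are all assumptions made for illustration.

```python
import math
from collections import Counter


def toy_embed(text, vocabulary):
    """Count how often each vocabulary word occurs in `text` (stand-in for a real embedding)."""
    counts = Counter(text.lower().split())
    return [float(counts[word]) for word in vocabulary]


def top_k(query, chunks, vocabulary, k=4):
    """Return the `k` chunks whose toy vectors are closest to the query vector."""
    query_vec = toy_embed(query, vocabulary)
    scored = [
        (math.dist(query_vec, toy_embed(chunk, vocabulary)), chunk)
        for chunk in chunks
    ]
    scored.sort(key=lambda pair: pair[0])  # smallest distance first
    return [chunk for _, chunk in scored[:k]]
```

In the real pipeline the vectors come from the embedding model and capture meaning rather than exact word matches, which is why a question can retrieve a chunk that uses different wording.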

User Input and Response Generation

- The code passes a sample question ("What interests you about this particular job?") to the chain. The question is hard-coded here rather than typed in by the user.

- It then uses retrieval_chain to invoke the retrieval and answer generation process. The question is fed into the retrieval chain, which retrieves the most relevant chunks from the loaded documents.

- Finally, the retrieved context is used with the question in the defined prompt to query the LLM for an answer. The answer generated by the LLM is printed.

response = retrieval_chain.invoke({"input": "What interests you about this particular job?"})

print(response["answer"])
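Besides "answer", the response dictionary also carries the retrieved documents under "context", which makes it possible to show where an answer came from. The following is a minimal sketch, assuming each retrieved document has PyPDFLoader-style metadata with a `source` path and a zero-based `page` number. `format_sources` is a hypothetical helper, not a LangChain function.

```python
def format_sources(response):
    """List the distinct (file, page) labels behind an answer, in retrieval order."""
    seen = []
    for doc in response.get("context", []):
        meta = getattr(doc, "metadata", {}) or {}
        source = meta.get("source", "unknown")
        page = meta.get("page")
        # Assumed zero-based page numbers, so add 1 for display.
        label = source if page is None else f"{source}, page {page + 1}"
        if label not in seen:
            seen.append(label)
    return seen
```

For example, `print(format_sources(response))` after the call above would list the pages that supplied the context.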

Here is the complete script to run on a local machine:

import os

from langchain_community.document_loaders import PyPDFLoader

from langchain_openai import OpenAIEmbeddings

from langchain_openai import ChatOpenAI

from langchain.chains import create_retrieval_chain

from langchain_core.documents import Document

from langchain_community.vectorstores import FAISS

from langchain_core.prompts import ChatPromptTemplate

from langchain_text_splitters import RecursiveCharacterTextSplitter

from langchain.chains.combine_documents import create_stuff_documents_chain

# Setting Up the Environment (Replace with your API key)

os.environ["OPENAI_API_KEY"] = "sk-YOUR_OPENAI_API_KEY"

# Create the embedding model and the chat model

embeddings = OpenAIEmbeddings() # Create an object for generating embeddings

llm = ChatOpenAI() # Create an object to interact with the OpenAI chat model

# 1. Load the PDF Document

pdf_loader = PyPDFLoader('50-questions.pdf')

docs = pdf_loader.load() # Load the PDF, one document per page

# 2. Prepare the Chat Prompt Template

prompt = ChatPromptTemplate.from_template("""

Answer the following question based only on the provided context:

<context>

{context}

</context>

Question: {input}""")

# This template defines the format for prompting the LLM with context and a question.

# 3. Split the Text into Chunks

text_splitter = RecursiveCharacterTextSplitter()

documents = text_splitter.split_documents(docs)

# This step splits the loaded pages into smaller chunks. With the default settings a chunk can contain several questions, not exactly one.

# 4. Create Document Embeddings

vector = FAISS.from_documents(documents, embeddings)

# This line generates dense vector representations (embeddings) for each chunk and stores them in a FAISS index. These embeddings capture the semantic meaning of the text and help retrieve relevant chunks.

# 5. Build the Retrieval Chain

retriever = vector.as_retriever()

document_chain = create_stuff_documents_chain(llm, prompt)

retrieval_chain = create_retrieval_chain(retriever, document_chain)

# Here, we create two chains:

# - Document Chain: This chain uses the LLM to answer the user's question based on the retrieved context.

# - Retrieval Chain: This retrieves the chunks most relevant to the user's input question using the document embeddings and passes them to the document chain.

# 6. User Input and Response Generation

response = retrieval_chain.invoke({"input": "What interests you about this particular job?"})

print(response["answer"])

# This passes a hard-coded sample question to the chain, retrieves the most relevant chunks from the document, and then uses the LLM to answer the question based on that context. Finally, it prints the LLM's generated answer.

This code demonstrates how to leverage OpenAI's large language model (LLM) to answer questions from a PDF document in a context-aware manner. Let's break down the steps:

- Setting Up: We import the necessary libraries and set the OpenAI API key (replace the placeholder with yours).

- Loading the PDF: The PyPDFLoader class loads the content of the "50-questions.pdf" file.

- Chat Prompt Template: This defines a template for prompting the LLM, including placeholders for context ({context}) and the user's question ({input}).

- Splitting Text: The loaded documents are split into smaller chunks. With the splitter's default settings, a chunk can hold several questions.

- Creating Embeddings: Dense vector representations (embeddings) are generated for each chunk using OpenAIEmbeddings. These embeddings capture the meaning of the text and help retrieve relevant chunks.

- Building the Retrieval Chain: This step involves creating two chains:

  - Retrieval Chain: It retrieves the chunks most relevant to the user's input question using the document embeddings.

  - Document Chain: This chain uses the LLM to answer the user's question based on the retrieved context.

- User Input and Response: The script passes a sample question ("What interests you about this particular job?") to the retrieval chain, which retrieves the most relevant chunks. The retrieved context and the question are then used to query the LLM, and the LLM's generated answer is printed.
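The script asks a single hard-coded question. To turn it into something closer to a chatbot, one option is a small loop that reads questions from the terminal. This is a minimal sketch, assuming `chain` is any object with an `invoke` method that returns a dictionary containing "answer", as `retrieval_chain` does above. `chat_loop` and its `read` and `write` parameters are hypothetical names introduced here.

```python
def chat_loop(chain, read=input, write=print):
    """Ask for questions until the user enters an empty line or 'exit'."""
    while True:
        question = read("Question (blank to quit): ").strip()
        if not question or question.lower() == "exit":
            break
        # Same call as in the script, but with the typed question.
        response = chain.invoke({"input": question})
        write(response["answer"])


# Usage, once retrieval_chain has been built:
# chat_loop(retrieval_chain)
```

Each question is answered independently in this sketch; it does not carry conversation history from one turn to the next.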

Frequently Asked Questions

- How to build a LangChain PDF chatbot?

You can build a LangChain PDF chatbot by following these steps:

- Load the PDF document.

- Split the document into chunks.

- Generate embeddings for each chunk to capture meaning.

- Use retrieval and LLM chains to answer user questions based on context.

You need Python and the libraries listed in the installation section above.

- How do I make a chatbot chat with my PDF?

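A chatbot does not converse with the PDF file itself; it needs the text extracted from the file first. LangChain can load the PDF, retrieve the relevant passages, and pass them to an LLM to provide context-aware answers to your questions about the PDF's content.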

- Can I input a PDF into ChatGPT?

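In the API-based setup used in this post, the model only receives text, so the PDF content has to be extracted first. You can copy and paste relevant text from the PDF or use tools like LangChain to make the PDF content accessible to the model. Depending on your plan and version, the ChatGPT app may also accept PDF uploads directly.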

- Can I use LangChain without OpenAI?

Yes. LangChain's document loading, splitting, and retrieval components do not depend on OpenAI, although this post uses OpenAI for both the embeddings and the LLM. Question answering still requires some embedding model and LLM. You can explore open-source models such as Mistral and Gemma, which are available from Hugging Face or can be run locally with Ollama.

- Does LangChain use GPT-4?

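LangChain is flexible and can integrate with various LLMs. The example in this post calls ChatOpenAI() without naming a model, so it uses that class's default model. To use GPT-4 or another specific model, pass the model argument to ChatOpenAI.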

- Can a chatbot read PDFs?

Many chatbots can't directly "read" PDFs for conversation. However, tools like LangChain can extract the text from PDFs and answer questions based on their content.

- What is the best AI for PDF chat?

There's no single "best" AI, but LangChain offers a framework to build a question-answering system for PDFs using OpenAI's LLM (or potentially other LLMs).

- Can ChatGPT analyze a PDF?

In the setup described here, the model analyzes text rather than the PDF file itself. You need to extract the text or use tools like LangChain to bridge the gap.

- Is there an AI that can read PDF files?

While chatbots might not directly "read" PDFs for conversation, tools like LangChain can process PDFs and answer questions based on their content, leveraging AI for analysis.